Using LLMs

Using AI to integrate Wert

Wert documentation is now AI-enhanced. Whether you’re using ChatGPT, Claude, or a custom LLM integration, we’ve made it easy to feed Wert docs directly into your models and even easier to obtain relevant answers.

Disclaimer

While LLMs are powerful tools for interpreting documentation, they may occasionally generate inaccurate or outdated code snippets. Always verify AI-generated implementation details against the official Wert documentation or with Technical Support Staff before deploying to production.

LLM Files

To help LLMs stay current on how Wert works, we expose two files for ingestion:

- llms.txt - A concise list of top-level docs pages, great for smaller models or quick context building.

- llm-full.md - A more exhaustive listing that includes all pages, ideal for full-context indexing.

Copy the content and pass it to the LLM of your choice. You can also feed the content into your custom GPTs or other LLM apps on a regular basis so that answers to Wert-specific questions stay technically accurate.
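As a rough illustration of this workflow, the Python sketch below downloads one of the files and wraps it in a prompt. The URL `https://docs.example.com/llms.txt` is a placeholder rather than Wert's actual address, and `fetch_text` and `build_prompt` are hypothetical helper names. Sending the prompt to a model is left to whichever LLM client you use.

```python
from urllib.request import urlopen

# Placeholder URL (an assumption): replace with the real location of llms.txt.
LLMS_TXT_URL = "https://docs.example.com/llms.txt"


def fetch_text(url: str, timeout: float = 10.0) -> str:
    """Download a text file such as llms.txt and return it as a string."""
    with urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8")


def build_prompt(docs_text: str, question: str) -> str:
    """Combine documentation text and a question into a single prompt."""
    return (
        "Answer using only the Wert documentation below.\n\n"
        "<docs>\n" + docs_text.strip() + "\n</docs>\n\n"
        "Question: " + question.strip()
    )
```

A typical call would be `build_prompt(fetch_text(LLMS_TXT_URL), "your question")`. Re-running the download on a schedule is one way to keep a custom app's context in step with the published docs.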

Targeted Page Context

For more granular queries, you can feed a specific page’s link or its raw text directly into your LLM. This is the most efficient way to get precise technical answers for a single integration step without over-saturating the model's context window.

You can provide context in two ways:

-

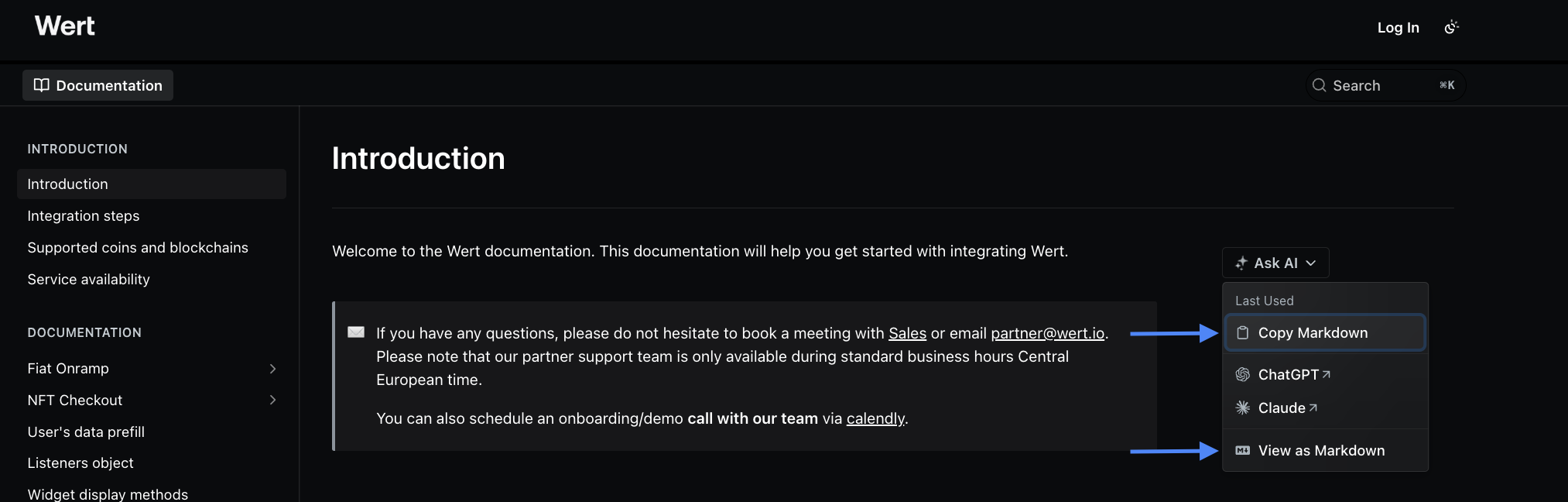

Share the Markdown Link: Open the URL of any specific documentation page by clicking on "View as Markdown" and provide the content to LLMs with web-browsing capabilities. See image below.

-

Copy Markdown: Use the "Copy Markdown" feature on any page to grab the raw Markdown text and paste it directly into your chat.
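If you paste copied Markdown into your own tooling rather than a chat window, a small size check can help keep a single page from crowding the context window. The sketch below is only an illustration: the four-characters-per-token ratio is a rough heuristic and not a property of any particular model, and the function names and the default budget are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    """Very rough token estimate: about 4 characters per token (a heuristic)."""
    return (len(text) + 3) // 4


def page_prompt(page_markdown: str, question: str, max_tokens: int = 8000) -> str:
    """Wrap one page's Markdown and a question, refusing oversized pages."""
    needed = estimate_tokens(page_markdown)
    if needed > max_tokens:
        raise ValueError(
            f"Page is about {needed} tokens, over the {max_tokens}-token budget"
        )
    return (
        "Use only this documentation page to answer.\n\n"
        f"{page_markdown.strip()}\n\n"
        f"Question: {question.strip()}"
    )
```

Raising an error instead of silently truncating the page is a deliberate choice here, since a cut-off integration step could lead the model to an incomplete answer.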
